Framing Generative AI applications as tools for cognition in education

Enmarcando las aplicaciones de IA

generativa como herramientas para la cognición en educación

Dr. Marc Fuertes-Alpiste. Associate Professor. Universitat de Barcelona. Spain

Received: 2024/04/06 Revised: 2024/04/29 Accepted: 2024/07/01 Online First: 2024/07/05 Published: 2024/09/01

How to cite this article:

Fuertes-Alpiste, M. (2024). Enmarcando las aplicaciones de IA generativa como herramientas para la cognición en educación [Framing Generative AI applications as tools for cognition in education]. Pixel-Bit. Revista De Medios Y Educación, 71, 42–57. https://doi.org/10.12795/pixelbit.107697

ABSTRACT

Generative AI applications

enable different useful functions for learning based on the generation of

content. This paper aims to offer a theoretical framework to understand them as

tools for cognition (TFC), framed in the perspective of sociocultural theory

and activity theory and distributed cognition. This perspective exemplifies how

thought is not only packaged inside the individual's mind, but is distributed

among subjects, objects, and artifacts, where tools mediate human activity and

help in the executive functions of thought. The perspective of TFC embodies an

educational socio-constructivist vision where learners build their knowledge

with these tools, taking advantage of their affordances. It is the concept of

learning "with" technology instead of the traditional vision of

learning "from" technology, where technological applications are

limited to providing information and evaluating the students' responses.

Finally, we describe Generative AI applications as TFC following David

Jonassen's pragmatic and pedagogical criteria, i.e. the capacity of knowledge

representation of different subjects, the facilitation of critical and

meaningful thinking (based on questioning and prompting) and how they enable

complex thinking among students when used in learning tasks, only when

executive functions are on the side of the learner.

RESUMEN

Las aplicaciones de IA

generativa permiten funciones útiles para el aprendizaje basadas en la

generación de contenido. Este artículo ofrece un marco teórico para entenderlas

como herramientas para la cognición (HPC), basado en la perspectiva de la

teoría sociocultural, la teoría de la actividad y la cognición distribuida.

Esta perspectiva ejemplifica cómo el pensamiento no sólo está empaquetado

dentro de la mente, sino que se distribuye entre sujetos, objetos y artefactos,

donde las herramientas median la actividad humana y ayudan en las funciones

ejecutivas del pensamiento. Encarna una visión en la que los alumnos construyen

su conocimiento con ellas, aprovechando sus posibilidades de acción. Es la

concepción de aprender "con" la tecnología en lugar de la visión

tradicional de aprender "de" la tecnología, donde las aplicaciones

tecnológicas se limitan a proporcionar información y a evaluar las respuestas

de los estudiantes. Finalmente, describimos las aplicaciones de IA generativa

como HPC siguiendo los criterios pragmáticos y pedagógicos de David Jonassen, como la capacidad de representación del

conocimiento, la facilitación del pensamiento crítico y significativo (basado

en preguntas y prompts) y cómo permiten el

pensamiento complejo entre estudiantes cuando se utilizan en tareas de

aprendizaje, solamente cuando las funciones ejecutivas las realizan ellos.

PALABRAS CLAVES· KEYWORDS

Artificial intelligence;

cognition; learning processes; critical thinking; computer uses in education

Inteligencia artificial;

cognición; procesos de aprendizaje; pensamiento crítico; usos de los

ordenadores en educación

1. Introduction

Generative AI (hereinafter, GAI) applications are attracting increasing interest in educational research and are presented as an opportunity

for personalizing learning, as a means of personal assistance, and as cognitive

supports for higher order thinking, but also as a source of ethical problems

and biases, lack of academic integrity, privacy issues and dissemination of

false information (Crompton & Burke, 2024; Mishra et al., 2024; Walter,

2024).

According to UNESCO, GAI is "(...) an artificial

intelligence (AI) technology that automatically generates content in response

to prompts written in natural-language conversational interfaces" (Miao

& Holmes, 2024, p.8). Moreover, it uses various AI technologies to create

content across various media formats (Schellaert et

al., 2023). This capacity to generate content that is plausible to people is

what makes GAI different from past AI technologies, along with the social dimension that derives from its interface, which is based on natural language: we communicate with it through chatboxes or directly with our own voices. It appears as a human agent with whom users relate through a feature as human as language (Author, 2018; Mishra et al., 2023).

There are several GAI applications that enable

different useful functions in education based on the generation of content

(such as ChatGPT, Copilot etc.), either to create, structure, synthesize,

reformulate texts or ideas, by students and/or by teachers, individually and/or

collectively. There are GAI applications that guide us in searching the literature (Perplexity), in summarizing content (Scribe, Claude), and in breaking down complex tasks into steps (Goblin Tools); others help with code writing (GitHub Copilot, AlphaCode) or generate content in multiple media (DALL-E, Sora, Synthesia), among others.

These GAI application affordances have been appraised

in the educational field. For instance, a UNESCO report from April 2023

identified their use in Higher Education as an aid in refining ideas, as an expert tutor, etc., for students' learning and for teaching (Sabzalieva & Valentini, 2023). Crompton and Burke's (2024) systematic review identified uses by teachers, such as teaching support and task automation, and uses for student learning, such as accessibility, explaining difficult concepts, acting as a conversational partner, providing personalized feedback, providing writing support, self-assessment, and facilitating student engagement and self-determination.

Given the importance they have in the current

educational debate, this paper aims to offer a theoretical framework to

understand the GAI applications as cognitive

tools, mindtools or tools for cognition (TFC from now on)

-which stems from the vision of Gavriel Salomon, Roy D. Pea, Howard Rheingold,

David Jonassen, among other introducers of the concept at the end of the 20th

century-, framed in a perspective of sociocultural theory, activity theory and

distributed cognition. Ultimately, theoretical frameworks for the integration of GAI in education are needed to scaffold research efforts that can help evolve these theories of AI in Education (Dawson et al., 2023), but they should also structure a view of education that places the student at the center of the learning process and enhances his/her agency and autonomy in the executive functions of cognition and in critical thinking, rather than reducing GAI to an artificial teacher or a tutorial-based educational system. This, we find, would be a limiting perspective. We intend to provide

theoretical knowledge that informs how to effectively integrate GAI

applications in our educational practice. The awareness of educational

philosophies that help shape our own views provides us with a consciousness of

the best technological choices for the greatest outcomes for our learners. This

is what underlies our views of education and technology and what brings

coherence and consistency to them (Kanuka, 2008).

2. Learning with technology: the

perspective of TFC in education

The use of digital technologies in education for

enhancing teaching and learning processes has been studied since their

appearance and dissemination. Derry and Lajoie (1993), Salomon et al. (1991)

and Jonassen (1996) distinguished two ways of integrating technology in education: learning from technology and learning with technology. Simple as it may seem, the difference between these two prepositions is huge. With the first computers, Computer-assisted

instruction (CAI) and Intelligent tutoring systems (ITS) were developed. They

followed behaviorist approaches (based on

stimulus-response and reinforcement of behavior), and

cognitivist approaches (considering how our thinking process is related to

working memory, long-term memory, schemata in our previous knowledge, memory

retrieval, and elaborated feedback and personalization of learning). This corresponds to the learning from technology approach, where technology takes the role of a teacher who provides the input and the corresponding feedback, reproducing the traditional teacher-centered approach to education, where the students' learning agency is low.

On the other hand, the learning with technology

approach is learner-centered, where the student uses

technology as a tool, aimed at doing something with it, not as a tutor. Thus, from

this perspective, cognition is on the side of the learner, who takes advantage

of the digital tools' affordances to do something with the tool that would not

be possible (or at least it would be harder) without it. This perspective is

rooted in the constructivist approach of teaching and learning where teachers

design student-centered activities with preestablished

pedagogical aims in which students have to construct their own learning.

According to Iiyoshi et al. (2005), this is carried

out through five different cognitive processes: information search, information

presentation, knowledge organization, knowledge integration and knowledge

generation. Derived from this, teachers can also adopt a constructionist

perspective, where kids' activity is oriented to the creation of artifacts,

unfolding their creativity (Papert, 1982), or

socio-constructivist approaches of learning, where learners interact actively

with the environment and with their peers and their educators to construct

their learning.

3. Sociocultural theory as an overarching theory

for TFC

The learning with technology perspective is

also rooted in sociocultural theory, created by Vygotsky, Leontiev and Luria during the 1920s in the Soviet Union. It

assumes that the historical development of human culture is different from

human biological evolution because it has its own rules (Vygotsky, 1978).

Cultural development is based on the use of tools, created by humans, to act on

the environment. They can be physical tools (e.g., a hammer or a screwdriver),

but also symbolic tools (e.g., language). Symbolic tools are decontextualized

from the environment – nature, biology – since the symbols they manipulate are

increasingly less dependent on the space-time context in which they are used. A

person without tools, only considering his/her biological evolution, cannot

evolve; without tools, the qualitative cognitive leaps that Vygotsky relates to

the transition from elementary cognition – that which is related to the primary

and natural – to higher cognition – the "cultural" and the social –

do not occur (Wertsch, 1995).

This influence also has an effect on building new

tools that will affect the physical and cultural environment that will, in

turn, also affect culture. This means that these tools make us, and they are

not just a matter of the present time, but they come from our ancestors, and

they will have an impact on the future. It focuses on activity as a culturally

mediated action (Engeström & Sannino, 2021). From

this perspective, the question revolving around whether to use or not to use

digital tools in our human activity -and here we really mean "in

education"-, is a false debate as tools are part of us. Without human

beings there is no human culture, but without culture -and cultural tools-

there are no human beings as we know them.

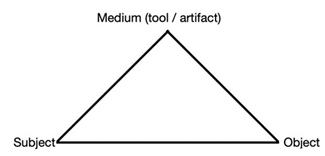

Sociocultural theory is based on culturally mediated

action and is normally represented in the form of a triangle with one element at each vertex (see Figure 1). At the base, between the Subject and the Object, there is no mediation. The Medium at the upper vertex is the artifact

(the tools) that mediates the subject's action on the object (the environment,

the other subjects). This is a representation of what is often called the first

generation of Activity Theory (Engeström &

Sannino, 2021).

Figure 1

The triangle of culturally mediated action

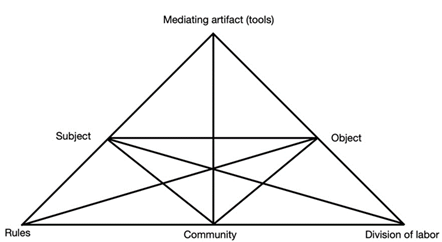

In a second generation of Activity theory, the

interactive triangle in Figure 1 was expanded to represent Leontiev's

(1978) idea of activity (not just a sole action), which helps represent a

larger mediation (Cole & Engeström, 1993). In Figure

1, the interaction is based on the individual action of the subject, mediated

by the Medium, but in the second generation (see Figure 2), the main unit of

analysis is the activity, that involves a community (the social dimension),

carried out following a set of rules (on the left side). In the right vertex of

the base, we find the "division of labor"

where the activity is divided between participants and tools (Bakhurst, 2009).

Figure 2

A representation of the second generation of Activity

Theory

What we want to stress is that this mediation places

people's cognition within social and cultural contexts of interaction and

activity (Salomon, 1993). We can say that this triangle is a map of a

distributed cognition, between people, community, and cultural tools and

artifacts. In this action system (Figure 1) and activity system (Figure 2), our

mind is distributed with the tools -creating a distributed cognition- that we

use to act on the environment (Cole & Engeström,

1993). This is why it is important to understand how these tools can offer

different forms of mediation, and GAI applications can have a role in human

action and collective activity as other digital tools also have.

4. Distributed cognitions with tools

With the first computer applications it was possible

to imagine a collaboration between users and digital tools for a better

performance (Salomon, 1993). These tools help us in tasks, guiding the activity

and augmenting our capabilities, but also affecting our cognition, when we

internalize these new forms of action (Vygotsky, 1978). The concept of

distributed cognition was studied from different perspectives (see Salomon,

1993), and it implies that the activity needs to adapt the means to the ends. It

leverages the tools' affordances, which are the actual and perceived properties

of tools (Gibson, 1979), but more particularly as possible actions that users

can develop with tools. It is not just what the user perceives in a tool that

will allow certain operations in a social activity or in an individual action,

but all the possible actions we could undertake with a tool (Norman, 1999) (for

instance, with GAI applications). Affordances are not always evident and therefore

must be learned (Gibson, 1989).

It is worth mentioning that these tools contain an

intelligence within their designs, what Lave (1988) describes as

"mythical" artifacts because they are already a part of our

consciousness and we are not aware of what they are, they become invisible,

like a speedometer in a car or a house thermostat. According to Dubé &

McEwen (2017), when we are using tools, we perceive the symbols for the

communication of affordances (for example, identifying a chatbox,

a history log in certain GAI applications), the actual affordances themselves,

and affordances that may be perceived by users based on their cognitive

abilities.

As Clark and Chalmers (1998) described, there is an active externalism of our cognition, where the environment has an

active role in our internal cognitive processes. When we use digital tools to

manipulate information through their options, they afford cognitive processes,

augmenting our cognitive capacities, such as when a player rotates Tetris

figures to make them fit in the spaces using the videogame affordances and not

just by rotating them mentally. The rotation affordance lets the player visualize

more quickly the action to be undertaken than if it had to be done just

mentally. As described by Kirsh and Maglio (1994), these are "epistemic actions", which help uncover information that is hard to process mentally and contribute to simplifying problem-solving tasks. They are different from pragmatic actions, which help reach a physical goal.

Technologies are part of activity systems (Scribner

& Cole, 1981), so when taking GAI applications into account, we must elicit

the activities they afford. The nature of the activity will affect the

cognitive processes, not just the tool per se (Salomon & Perkins, 2005).

Sharples (2023) identified possible interactive and social learning uses

between students and GAI applications, e.g., to explore possible scenarios, as

a Socratic "opponent", as a co-designer, as a help for data or

information interpretation, and so on.

When performing an activity, cognition may be

distributed between the person and the tool, and here we find two quite

different perspectives (Salomon et al., 1991). Firstly, the systemic one, which

is an aggregated performance of the person-tool (this is Pea's position). Distributed

cognition is not a matter of sharing or reallocating intelligence between mind,

context, and tools, but of stretching intelligence during the activity (Pea,

1993). Secondly, the analytic perspective considers the specific contributions

of the person and the tools (that would be Salomon's choice), where the person

has a predominant role, most importantly because the tool really does not

understand anything during the distributed cognition, just the individual does.

When talking about GAI applications such as ChatGPT, this has been a criticism from the beginning, as Chomsky et al. (2023) have pointed out. The system does not understand language, so it is not real intelligence that is stretched between person and tool. But this does not mean that these tools do not help off-load cognitive load in a task, nor that the tools themselves lack an intelligence of social origin, as cultural tools.

During a cognitive partnership, using tools affects the person's cognition in at least three forms. First, the effects with technology, where using a technology enhances cognitive processing and performance (this is an augmentation). Second, the effects of technology, where technology use leaves a "cognitive residue in the form of improved competencies, which affect subsequent distributed activities" (Salomon, 1993, p.123). And finally, the effects through technology, where technology use does not just augment our intellectual processing capacity, but also reorganizes it (Salomon & Perkins, 2005).

David Perkins advocated for the distributed cognition

perspective with the concept of the "person-plus" as opposed to the

"person-solo" when dealing with tasks and activity. The

"person-plus" takes advantage of the tools that help with the

cognition, just as a student's learning is not just in what is inside his/her

head but also in the "student-notebook" system (Perkins, 1993).

When dealing with collaboration between humans and

artificial agents, the concept of integrated hybrid intelligence

emerges. It refers to increased effectiveness in human activity. However, we

find that it only appraises the side of the augmentation of human capabilities

(the quantitative aspect, and not the qualitative one) (Akata

et al., 2020; Järvelä et al., 2023). For instance, according to Holstein et al.

(2020), this collaboration in performance and mutual learning happens for an

augmentation in goals, perception, action, and decision-making. We think it does not consider how this intellectual partnership happens within an action or an activity system, where interaction is not limited to augmentation but also involves a reorganization of human activity.

5. Framing GAI apps as TFC

Understanding digital tools as TFC means adopting a

perspective of learning with tools, and not just from them. They

have been called "tools for thought" (Rheingold, 1985),

"cognitive tools" (Pea, 1985; Salomon, 1993), "tools for

cognition" (TFC) (Pea, 1993), "Mindtools" (Jonassen, 1996), and

"psychological instruments" (Kozulin,

2000). In the case of this paper, we chose the term "TFC" as these

tools are not intelligent and because they are tools for a purpose of an

activity, which engage our cognition. "Cognitive tools" would mean

that they have been already created for cognition, but many of these applications

have been created for open purposes, which is the case of GAI apps. They can

become TFC depending on the educational purpose we give them (the learning with

perspective). However, the meaning of the term is abstract and with a diversity

of views (Kim & Reeves, 2007).

There are several classifications of digital tools as

TFC (or mindtools) regarding their nature and the nature of actions they afford

(Jonassen et al., 1998) or the cognitive processes they allow (Iiyoshi et al., 2005). We find Kim & Reeves' (2007)

classification interesting, which considers (1) the kind of knowledge they

process -whether general, domain-generic or domain-specific-, (2) the level of

interactivity between user and tool, and (3) the kind of representation they

allow -from concrete (isomorphic) to abstract (symbolic)-.

All these classifications are quite dated, and they do

not include GAI apps. The closest thing to AI applications we find in these

taxonomies would be expert systems, but they are different to GAI applications

as they function with student models and scaffold students' learning depending on how they are progressing according to such models. By

contrast, GAI apps are based on content generation and prompting. This may resemble querying a database, but a database limits itself to returning a response that is already stored in its memory, whereas queries to a GAI app receive new responses generated live, which affords a chat.

In any case, GAI applications can perform executive functions as creators of

content, and students can just sit back and let the application do all the

writing for them. But, as TFC, they must be used in a way where executive

functions are on the side of the learner's cognition. And they have the unique feature that they can be used in general, generic and specific knowledge domains,

and their representation capacity can be either concrete or abstract.

According to Jonassen (1996) there are three basic

practical criteria that TFC (mindtools, in his terms) should have, plus

six pedagogical criteria. We discuss these criteria to frame GAI apps as TFC

below:

5.1. Practical criteria

1. That they are computer-based:

This first feature seems out of date as almost three decades

have passed and digital tools are now pervasive in our daily life activities.

Indeed, GAI apps are digital tools that are based on Large Language Models. We

can access them through cloud-based applications, using computers, smartphones

and tablets.

2. That they are available as (digital) tools:

Normally, GAI applications are available after having

registered to a platform. These tools have taken the form of chatbots with a chatbox interface and allow natural language use. This kind

of interface is usable, ergonomic and very familiar for students as many of the

communication apps are based on such interfaces.

3. That they are affordable for the public domain:

GAI tools are spreading in large numbers (Alier et

al., 2024). OpenAI claims to have the mission to “ensure that artificial general intelligence benefits all of humanity” (OpenAI, 2024), and although we cannot be entirely sure how sincere this intention is, for now it offers some of its

features for free, although others are licensed. This also happens with other

GPT tools, many of them free for teachers and educators.

5.2. Pedagogical criteria

4. Knowledge representation capacity:

GAI apps have the capacity of writing with a plausible

appeal as their special feature. They can summarize, translate, paraphrase in

different styles and speech registers, and generate all kinds of content from

their database, or introduced by the user. People can use them for tasks

related to information, which are very common in educational settings. When

dealing with information, there is knowledge, the representation of it,

retrieval of information and construction (Perkins, 1993).

5. Generalizable to several knowledge domains:

According to Schellaert et

al. (2023), there are three unique properties of GAI applications. First, there

is flexibility in the input-output diversity and multimodal capacity of these

systems. Second, generality, as they can be applied to a wide range of tasks.

And finally, originality, as they afford the generation of new and original

content. They have a knowledge base that can be very wide like the one in

ChatGPT. But many of these apps can be customized adding a knowledge base.

6. They foster critical thinking:

One of the dangers that the educational community has attributed to AI tools is that they can be used in a non-ethical way (Crompton & Burke, 2024; Sharples, 2023). There is a fear that students can, and will, cheat with them and submit assignments that have been written directly by GAI applications. If this happens, the student's cognitive engagement in the task is low (in that the student minimally reviews what the GAI app has generated) or null. This has nothing to do with critical thinking; on the contrary, it promotes a superficial processing of information.

But GAI apps have the affordance of promoting critical

thinking when being used as TFC. It is important to point out that the aim is

to engage and enhance the learners' cognition with this partnership. Cognition

engages when learners develop an activity that entails thinking in meaningful

ways, to access, represent, organize and interpret information, helping

students think for themselves, making connections and creating new knowledge

(Kirschner & Erkens, 2006).

To this aim, the use of GAI applications as TFC must

be activity oriented, thus goal oriented. This means that the learner must

provide prompts to the tool and critically refine them to get the best results.

Eager & Brunton (2023) proposed a prompting process that begins with a goal

setting, a specification of the form the output should take, writing the actual

prompt and testing and iterating until a desired output is obtained.
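The four steps of this prompting process can be sketched in code. What follows is only an illustrative sketch, not an implementation from Eager & Brunton (2023): the names `PromptDraft`, `iterate_prompt`, `generate` and `review` are hypothetical, `generate` stands in for whatever GAI application is being used, and `review` stands in for the learner's own critical judgment of the output.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class PromptDraft:
    """Hypothetical container for the pieces of a prompt."""
    goal: str                       # step 1: what the learner wants to achieve
    output_format: str              # step 2: the form the output should take
    refinements: List[str] = field(default_factory=list)  # added while iterating

    def render(self) -> str:
        # Step 3: write the actual prompt from the pieces above.
        lines = [f"Goal: {self.goal}", f"Output format: {self.output_format}"]
        lines += [f"Refinement: {r}" for r in self.refinements]
        return "\n".join(lines)


def iterate_prompt(
    draft: PromptDraft,
    generate: Callable[[str], str],          # assumption: wraps some GAI tool
    review: Callable[[str], Optional[str]],  # the learner's judgment of the output
    max_rounds: int = 3,
) -> Tuple[str, str]:
    """Step 4: test and iterate until the learner accepts the output.

    `review` returns None to accept, or a refinement to add to the prompt.
    """
    output = ""
    for _ in range(max_rounds):
        output = generate(draft.render())
        feedback = review(output)
        if feedback is None:
            break
        draft.refinements.append(feedback)
    return draft.render(), output
```

In this sketch the tool never decides when the output is good enough: the decision to accept or to refine stays in `review`, that is, on the side of the learner.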

Providing good prompts -prompt engineering- is a desirable skill for AI literacy and for leveraging GAI apps for learning because it requires a logical chain of reasoning (Knoth et al., 2024). When giving the app an input to prompt a response, the student must use simple and clear language, give examples to model the desired outcome, provide context and, most importantly, refine and iterate when necessary, while maintaining ethical and responsible behavior (Miao & Holmes, 2023).

It is important to foster AI literacy about prompt

engineering to understand what works best to generate adequate responses, and

this entails thinking critically and creatively. This can be done with zero-shot prompts -when we give the GAI application a single, generic prompt to obtain a generic answer-, or with few-shot prompts -where the user refines the prompts with examples that the answer should contain until receiving an adequate answer-. These are input-output prompts, but we can also develop chain-of-thought

prompts where we ask the program to explain the output step-by-step so that it

can be thoroughly assessed and help cultivate critical thinking in education

(Walter, 2024).
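To make the difference between these three kinds of prompts concrete, here is a minimal sketch that only builds the prompt text. It does not call any GAI application, and the function names and the "Q:/A:" layout are illustrative assumptions rather than a standard format.

```python
from typing import List, Tuple


def zero_shot(question: str) -> str:
    """A single, generic prompt with no examples."""
    return question


def few_shot(question: str, examples: List[Tuple[str, str]]) -> str:
    """Prepend worked question/answer examples that model the desired answer."""
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def chain_of_thought(question: str) -> str:
    """Ask for a step-by-step explanation so the output can be assessed."""
    return (
        f"{question}\n"
        "Explain your reasoning step by step before giving the final answer."
    )
```

For instance, `few_shot("What is 2+2?", [("What is 1+1?", "2")])` produces a prompt with one worked example followed by the open question. Choosing which examples to include, and judging the step-by-step explanation that comes back, remain tasks for the learner.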

Moreover, when prompting, there is a need for the user

to possess a fair amount of content knowledge in order to assess the obtained

responses or outputs, which is in addition to the critical thinking skills required

to verify them and the necessary iteration of the prompt refinement (Cain,

2024; Eager & Brunton, 2023). This is the knowledge (skills, attitudes and

dispositions) required to learn facts, and it is the knowledge base for

critical thinking and creative thinking (Jonassen, 1996; Perkins, 1993). Complex

thinking skills such as problem-solving, design and decision-making, which are

executive functions of cognition, can be supported by tools (Perkins, 1993).

7. They afford a transfer of learning:

According to Jonassen (1996), the transfer of learning

is directly related to problem solving, so any thinking that GAI applications

promote facilitates problem solving and transfer of learning. TFC are

generalizable tools and can be used in different settings to facilitate

cognition (Kirschner & Erkens, 2006). GAI apps are not domain dependent, so

a transfer of skills can take place between domains, and prompting to interact

with these systems can be transferred to a variety of knowledge domains

(Walter, 2024).

When we speak of the effects with and the effects of technology (Salomon, 1993), we mean that it is desirable to

achieve a transfer of skills from the cognitive collaboration where the person

is becoming more autonomous with time.

8. They afford a simple, powerful formalism of

thinking:

Using GAI applications as TFC means engaging in complex activities that promote deep thinking when doing tasks with them, rather than basing them on a stimulus-response education or understanding them as intelligent agents (with agency). This collaboration is not only based on

augmentation of action, but also on the reorganization of the activity. This is

the case of the study by Nguyen et al. (2024) on the use of a GAI writing tool

for PhD students, where iterations and interactions with the tool showed a

better performance in their writing compared with those who just used the tool

as a source of information. Also, a nursing education study (Simms, 2024) showed

that students could reflect on their questioning, the obtained responses and on

their decision-making in problem-solving (these are executive functions). So,

it contributed to a constructivist meaning making process.

9. They are easily learnable:

GAI applications have a manageable intrinsic cognitive

load that affects learning positively. In fact, when using them in a learning

task, they should help increase the germane cognitive load to improve the

process of acquiring new knowledge in the long-term memory. These tools follow

a familiar interface in the form of a chatbox. There

is not a cognitive load that affects its adoption as a TFC, just the danger of

understanding it as a reliable intelligent agent to learn from. AI literacy

should be added in Teaching Digital Competence frameworks such as DigCompEdu (Punie & Redecker, 2017) so that both

educators and students are able to leverage the benefits of GAI applications

and to avoid their shortcomings.

6. Conclusions

We have offered a theoretical framework that places

GAI applications under the perspective of TFC in education. TFC are not a specific

kind of technology but a concept or a metaphor about how to integrate

technology in teaching and learning processes to empower constructivist and/or

socio-constructivist learning, where students use them as tools aimed at learning

with them, establishing a cognitive partnership, and where they have the

main role and agency, not the tool. This is the opposite view of learning from

GAI applications.

Sociocultural theory and activity theory work as an

overarching theoretical context to understand digital tools as media for

distributed activity between people, context, and tools. There is a

distribution of cognition between the person and the TFC so that the person can

go further in a joint system of human(s)-tool(s). Without it, the task could be

hard or even impossible to attain.

GAI applications meet the practical and pedagogical

requirements identified by David Jonassen (1996) to work as TFC. Thus, we have

updated Jonassen's earlier classification of "mindtools" by adding these

specific AI tools. They stand out as TFC for critical thinking based on

prompting.

To become TFC, they must be used with a purpose: to

reach an objective that provides some form of motivation for the learning activity. If we

want to leverage their potential in education, we must understand how their

affordances can promote learning. The TFC perspective redirects educational

practice from the individual without tools, or from the individual using

technology that acts as an artificial tutor (the traditional teacher-centered view of education), to the recognition of the

cognitive collaboration between students and TFC. It is not merely that we can

include their use in education; we must encourage it, preparing students for a

future of cognitive partnerships and equipping them with the skills (which

will not be person-solo based) that they will need in their future

professional life (DeFalco & Sinatra, 2019; IFTF, 2017; Perkins, 1993).

Digital tools should only be used for skill mastery

and not for deskilling students (Salomon, 1993). TFC should help students think,

not take over their cognition by simply off-loading it or by doing the whole

job. They should not perform students' executive functions (e.g.,

decision-making) but facilitate deeper thinking by leveraging their epistemic

actions (Kim & Reeves, 2007). It is fundamental that, when they are used in

educational settings, teachers design activities that integrate them while

ensuring that students use them to review their inputs and outputs, to

refine prompts and, eventually, to improve their critical thinking skills, because

the responses we obtain from GAI applications may be opaque (Bearman & Ajjawi, 2023).

We are still in the early stages of integrating GAI

applications in teaching and learning and of knowing their affordances for

distributed cognition. We need more research to shed light on these possible

distributed cognitions and to elicit the use of their epistemic actions.

References

Akata, Z., Balliet, D., de Rijke, M., Dignum, F., Dignum,

V., Eiben, G., Fokkens, A., Grossi, D., Hindricks, K., Hoos, H., Hung, H., Jonker, C., Monz, C., Neerincx, M., Oliehoek, F., Prakken, H., Schlobach, S., van

der Gaag, L., ..., Welling, M. (2020). A Research Agenda for Hybrid Intelligence: Augmenting

Human Intellect with Collaborative, Adaptive, Responsible, and Explainable

Artificial Intelligence. Computer,

53(8), 18-28. http://doi.org/10.1109/MC.2020.2996587

Alier,

M., García-Peñalvo, F.J., & Camba, J.D. (2024). Generative Artificial Intelligence in Education: From

Deceptive to Disruptive. International Journal of Interactive Multimedia and

Artificial Intelligence, 8(5), 5-14. https://doi.org/10.9781/ijimai.2024.02.011

Bakhurst, D. (2009). Reflections on activity theory. Educational

Review, 61(2), 197–210. https://doi.org/10.1080/00131910902846916

Bearman, M.,

& Ajjawi, R. (2023). Learning to work with the

black box: Pedagogy for a world with artificial intelligence. British

Journal of Educational Technology, 54, 1160–1173. https://doi.org/10.1111/bjet.13337

Cain, W.

(2024). Prompting Change: Exploring Prompt Engineering in Large Language Model

AI and Its Potential to Transform Education. TechTrends,

68, 47–57. https://doi.org/10.1007/s11528-023-00896-0

Chomsky, N.,

Roberts, I., & Watumull, J. (2023, March 8). The

false promise of ChatGPT. The New York Times. https://shorturl.at/gvmOw

Clark, A.,

& Chalmers, D. (1998). The extended mind. Analysis, 58(1), 7–19. https://shorturl.at/wj9RI

Cole, M.

& Engeström, Y. (1993). A cultural-historical

approach to distributed cognition. In G. Salomon (ed.), Distributed

cognitions. Psychological and educational considerations (pp. 1-46).

Cambridge University Press.

Crompton,

H., & Burke, D. (2024). The Educational Affordances and Challenges of

ChatGPT: State of the Field. TechTrends, 68,

380–392. https://doi.org/10.1007/s11528-024-00939-0

Dawson, S., Joksimovic, S., Mills, C., Gašević,

D. & Siemens, G. (2023). Advancing theory in the age of artificial

intelligence. British Journal of Educational Technology, 54, 1051-1056. https://doi.org/10.1111/bjet.13343

DeFalco,

J.A. & Sinatra, A.M. (2019). Adaptive Instructional Systems: The Evolution

of Hybrid Cognitive Tools and Tutoring Systems. In R. Sottilare,

J. Schwarz (eds) Adaptive Instructional Systems. HCII 2019. Lecture Notes in

Computer Science, vol. 11597. Springer, Cham. https://doi.org/10.1007/978-3-030-22341-0_5

Derry,

S. J., & Lajoie, S. P. (1993). A middle camp for (un)intelligent instructional

computing: An introduction. In S. P. Lajoie & S. J. Derry (Eds.), Computers as cognitive tools (pp. 1-14).

Lawrence Erlbaum Associates.

Dubé, A. K.,

& McEwen, R. N. (2017). Abilities and affordances: factors influencing

successful child–tablet communication. Educational Technology Research and Development, 65, 889–908. https://doi.org/10.1007/s11423-016-9493-y

Eager, B.,

& Brunton, R. (2023). Prompting Higher Education Towards AI-Augmented

Teaching and Learning Practice. Journal of University Teaching &

Learning Practice, 20(5). https://doi.org/10.53761/1.20.5.02

Engeström, Y., & Sannino, A. (2021). From mediated actions to

heterogenous coalitions: four generations of activity-theoretical studies of

work and learning. Mind, Culture, and Activity, 28(1), 4-23. http://doi.org/10.1080/10749039.2020.1806328

Garcia

Brustenga, G., Fuertes-Alpiste, M. &

Molas-Castells, N. (2018). Briefing

paper: chatbots in education. eLearning

Innovation Center, Universitat Oberta de Catalunya. https://doi.org/10.7238/elc.chatbots.2018

Gibson, J.

J. (1979). The ecological approach to visual perception. Houghton Mifflin Company.

Gibson, E.

J. (1989, July). Learning to perceive or perceiving to learn? Paper presented

to the International Society for Ecological Psychology, Oxford.

Holstein,

K., Aleven, V., & Rummel, N. (2020). A Conceptual

Framework for Human–AI Hybrid Adaptivity in Education. In: I. Bittencourt, M. Cukurova, K. Muldner, R. Luckin, E. Millán. (eds) Artificial Intelligence in

Education. AIED 2020. Lecture Notes in Computer Science, vol 12163.

Springer, Cham. https://doi.org/10.1007/978-3-030-52237-7_20

IFTF

[Institute for the Future]. (2017). The next era of human / machine partnerships. Emerging

technologies' impact on society and work 2030. Institute for the Future & Dell Technologies. https://shorturl.at/4DJUD

Iiyoshi, T., Hannafin, M. J., & Wang, F. (2005).

Cognitive tools and student-centered learning:

rethinking tools, functions and applications. Educational Media

International, 42(4), 281-296. https://doi.org/10.1080/09523980500161346

Järvelä, S.,

Nguyen, A., & Hadwin, A. (2023). Human and artificial intelligence

collaboration for socially shared regulation in learning. British Journal of

Educational Technology, 54, 1057–1076. https://doi.org/10.1111/bjet.13325

Jonassen, D.

H. (1996). Computers in

the classroom. Mindtools for critical thinking. Prentice Hall.

Jonassen, D.

H., Carr, C., & Yueh, H. P. (1998). Computers as mindtools for engaging learners

in critical thinking. TechTrends, 43(2),

32-35. https://doi.org/10.1007/BF02818172

Kanuka, H.

(2008). Understanding e-learning technologies-in-practice through

philosophies-in-practice. In T. Anderson (Ed.), The theory and practice of

online learning (2nd ed., pp. 91–118). Athabasca University Press.

Kim, B.,

& Reeves, T. C. (2007). Reframing research on learning with technology: in

search of the meaning of cognitive tools. Instructional Science, 35,

207-256. https://doi.org/10.1007/s11251-006-9005-2

Kirschner,

P. A., & Erkens, G. (2006). Cognitive Tools and Mindtools for Collaborative

Learning. Journal of Educational Computing Research, 35(2),

199-209. https://doi.org/10.2190/R783-230M-0052-G843

Kirsh, D. &

Maglio, P. (1994). On Distinguishing Epistemic from Pragmatic Action. Cognitive

Science, 18, 513-549. https://doi.org/10.1207/s15516709cog1804_1

Knoth, N., Tolzin, A., Janson, A. & Leimeister, J. M. (2024). AI

literacy and its implications for prompt engineering strategies. Computers

and Education: Artificial Intelligence, 6. https://doi.org/10.1016/j.caeai.2024.100225

Kozulin,

A. (2000). Instrumentos psicológicos. La educación desde una perspectiva

sociocultural. Paidós.

Lave, J.

(1988). Cognition in

practice. Cambridge

University Press.

Leontiev, A. N. (1978). Activity,

consciousness, and personality.

Prentice-Hall.

Miao,

F., & Holmes, W. [UNESCO] (2023). Guidance for generative AI in education and research. UNESCO.

Mishra, P.,

Oster, N. & Henriksen, D. (2024). Generative AI, Teacher Knowledge and

Educational Research: Bridging Short- and Long-Term Perspectives. TechTrends, 68, 205–210. https://doi.org/10.1007/s11528-024-00938-1

Nguyen, A.,

Hong, Y., Dang, B., & Huang, X. (2024). Human-AI collaboration patterns in

AI-assisted academic writing. Studies in Higher Education, 1–18. https://doi.org/10.1080/03075079.2024.232359

Norman, D.

A. (1999). Affordance, conventions, and design. Interactions, 6(3),

38–43.

OpenAI

(2024, May 10). OpenAI. About. https://openai.com/about/

Papert, S. (1982). Mindstorms: children, computers and powerful ideas. Harvester Press.

Pea, R. D.

(1985). Beyond amplification: Using the computer to reorganize mental

functioning. Educational Psychologist, 20(4), 167-182. https://doi.org/10.1207/s15326985ep2004_2

Pea, R. D.

(1993). Practices of distributed intelligence and designs for education. In G.

Salomon (ed.), Distributed cognitions. Psychological and educational

considerations (pp. 47-87). Cambridge University Press.

Perkins, D.

N. (1993). Person-plus: a distributed view of thinking and learning. In G.

Salomon (ed.), Distributed cognitions. Psychological and educational

considerations (pp. 88-110). Cambridge University Press.

Punie, Y.,

& Redecker, C (Eds.). (2017). European

framework for the digital competence of educators: DigCompedu. Publications Office of the European Union. https://doi.org/10.2760/178382

Rheingold,

H. (1985). Tools For

Thought: The History and Future of Mind-Expanding Technology. The MIT Press.

Sabzalieva, E., & Valentini, A. [UNESCO] (2023). ChatGPT and artificial intelligence in higher

education: quick start guide. UNESCO.

Salomon, G.

(1993). No distribution without individuals' cognition: a dynamic interactional

view. In G. Salomon (ed.), Distributed cognitions. Psychological and

educational considerations (pp. 111-138). Cambridge University Press.

Salomon, G.

& Perkins, D. (2005). Do technologies make us smarter? Intellectual

amplification with, of and through technology. In R. Sternberg and D.

Preiss (eds.), Intelligence and Technology: The Impact of Tools on the

Nature and Development of Human Abilities (pp. 71-86). Lawrence Erlbaum.

Salomon, G.,

Perkins, D. N., & Globerson, T. (1991). Partners

in cognition: Extending human intelligence with intelligent technologies. Educational

Researcher, 20(3), 2-9. https://doi.org/10.3102/0013189X0200030

Schellaert, W., Martínez-Plumed, F., Vold, K., Burden, J.,

Casares, P. A. M., Sheng Loe, B., Reichart, R., Ó hÉigeartaigh, S., Korhonen, A., & Hernández-Orallo, J.

(2023). Your Prompt is My Command: On Assessing the Human-Centred Generality of

Multimodal Models. Journal of Artificial Intelligence Research, 77,

377-394. https://doi.org/10.1613/jair.1.14157

Scribner, S.

& Cole, M. (1981). The

psychology of literacy. Harvard

University Press.

Sharples, M.

(2023). Towards social generative AI for education: theory, practices and

ethics. Learning: Research and Practice, 9(2), 159–167. https://doi.org/10.1080/23735082.2023.2261131

Simms,

R. C. (2024). Work With ChatGPT, Not Against: 3 Teaching

Strategies That Harness the Power of Artificial Intelligence. Nurse Educator,

49(3), 158-161. http://doi.org/10.1097/NNE.0000000000001634

Vygotsky, L.

S. (1978). Mind in

society. Harvard

University Press.

Walter, Y.

(2024). Embracing the future of Artificial Intelligence in the classroom: the

relevance of AI literacy, prompt engineering, and critical thinking in modern

education. International Journal of Educational Technology in Higher Education,

21(15). https://doi.org/10.1186/s41239-024-00448-3

Wertsch,

J. V. (1995). Vygotsky y la formación social de la mente. Paidós.